How to Deploy Private AI Applications Across Enterprise Environments

At Tensor9, we’re focused on solving one of the biggest challenges in enterprise AI adoption: how to deliver AI securely inside customer environments without rebuilding your existing SaaS product or drowning in operational complexity.

Tensor9’s new free-to-use Customer Playground lets you deploy a sample app—including an AI model and chat interface—into your own AWS account in just a few minutes, offering a realistic glimpse into the power of “private SaaS.”

To highlight the Tensor9 vendor experience that makes this all possible, we just released a new demo video that walks through how Tensor9 enables SaaS and AI vendors to deploy and operate private, ChatGPT-like apps directly in enterprise customers’ AWS accounts. Below is a recap of the key capabilities and the vendor experience. Check out the full video to learn more.

Why Enterprises Need Private AI

Enterprises in regulated industries such as banking, healthcare, and government cannot rely on public SaaS AI services because of strict data privacy and compliance requirements. To adopt generative AI, these organizations need systems that run entirely within their own cloud environments, which rules out most off-the-shelf SaaS AI tools.

Tensor9 provides a platform for software vendors to deploy and operate SaaS applications in customer accounts without building custom deployment tooling or standing up dedicated operations teams.

How Tensor9 Works

At the core of Tensor9 is a digital twin model. Applications are described in infrastructure-as-code (IaC), such as AWS CloudFormation or Terraform, and Tensor9 ingests this IaC to produce a deployable appliance that can be instantiated in a customer’s own AWS account.

For each customer environment, Tensor9 maintains a digital twin in the vendor’s own cloud. The twin is a miniature representation that mirrors the structure of the customer deployment, giving the vendor observability without direct access to customer data. Logs, metrics, and deployment status flow through the twin, while data and workloads remain isolated within the customer’s account.
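To make the data-isolation idea concrete, here is a conceptual sketch of the kind of rule a digital twin implies: operational telemetry about known resources is mirrored, and anything classed as customer data is rejected. The `TelemetryEvent` and `DigitalTwin` types, the event kinds, and the resource names are all hypothetical illustrations, not Tensor9’s API or implementation. In a real system this check would sit on the customer side of the boundary, so filtered data never leaves the account.

```python
from dataclasses import dataclass, field

# Hypothetical event kinds; the post does not describe Tensor9's telemetry schema.
FORWARDED_KINDS = {"deployment_status", "metric", "log"}


@dataclass
class TelemetryEvent:
    resource_id: str  # logical ID of a resource in the customer stack
    kind: str         # one of FORWARDED_KINDS, or e.g. "customer_data"
    payload: dict = field(default_factory=dict)


@dataclass
class DigitalTwin:
    """Toy vendor-side mirror of one customer deployment (illustrative only)."""
    customer: str
    resources: set  # structure mirrored from the customer's stack
    received: list = field(default_factory=list)

    def ingest(self, event: TelemetryEvent) -> bool:
        # Accept only operational telemetry about resources the twin mirrors.
        # Anything else (such as customer data) is rejected. In practice this
        # filter belongs on the customer side so rejected data never leaves.
        if event.kind not in FORWARDED_KINDS or event.resource_id not in self.resources:
            return False
        self.received.append(event)
        return True


twin = DigitalTwin(customer="example-bank", resources={"ChatService", "ChatLoadBalancer"})
print(twin.ingest(TelemetryEvent("ChatService", "metric", {"CPUUtilization": 92.0})))
print(twin.ingest(TelemetryEvent("ChatService", "customer_data", {"prompt": "..."})))
```

The first call is accepted and the second is rejected, so the twin ends up holding only the metric event.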

Application Onboarding

The demo showcases a private chat application built on:

Amazon ECS Fargate for container orchestration

Open WebUI with locally hosted LLMs

A Network Load Balancer and VPC networking

The original CloudFormation template for this stack is ingested into Tensor9. The platform converts it into a reusable appliance, which can then be deployed in any customer AWS account without modification.

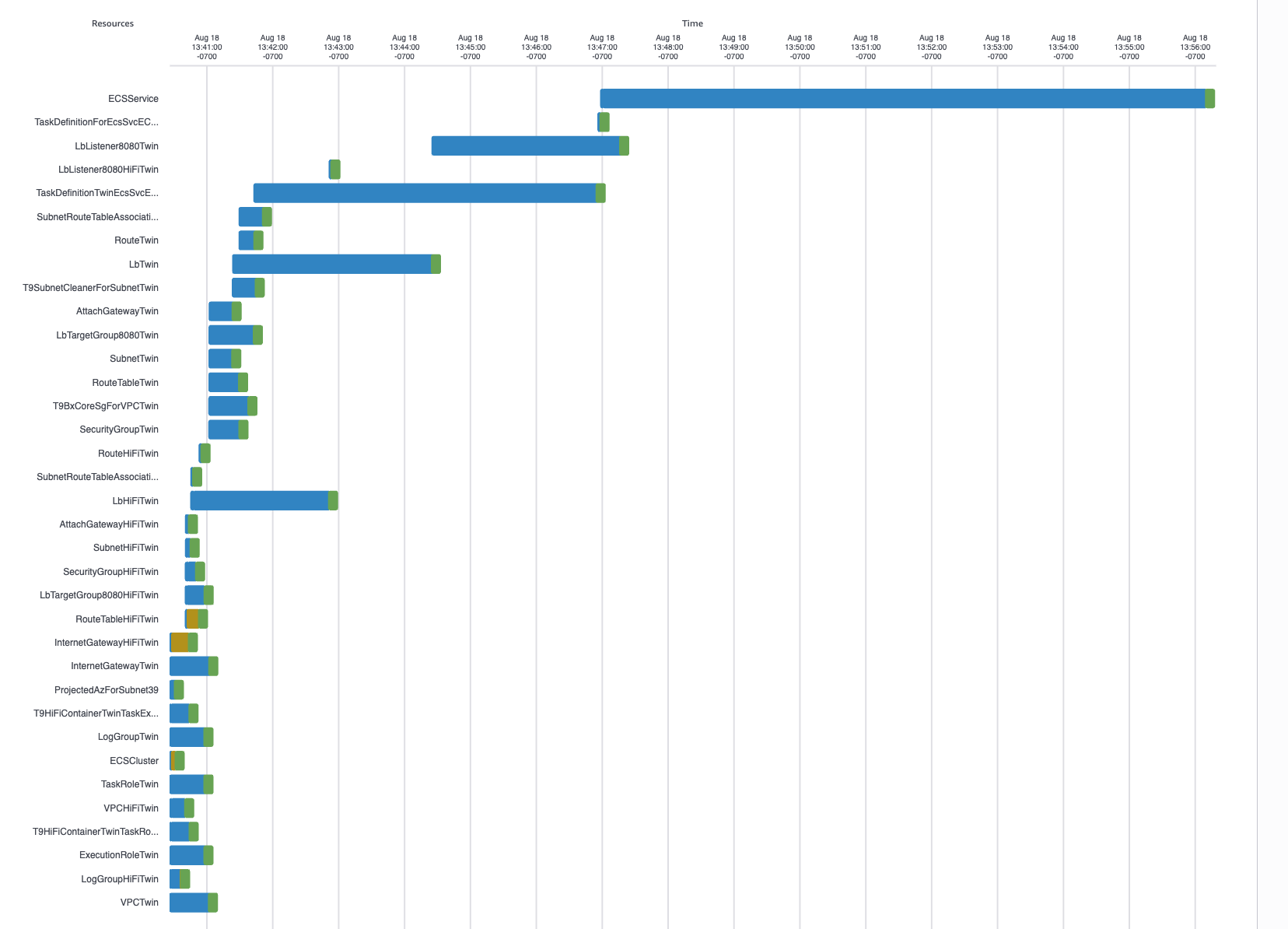

AWS console events timeline view of the deployment of resources into the twin stack
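For readers less familiar with CloudFormation, a template for a stack like this declares each component as a typed resource, and that declaration is what gets ingested. The sketch below is a heavily trimmed, hypothetical template written as a Python dict in CloudFormation’s JSON shape (required properties such as subnets, container definitions, and listeners are omitted), plus a small helper that counts resources by type. The logical IDs are illustrative; this is not the demo’s actual template.

```python
from collections import Counter

# Heavily trimmed, hypothetical template in CloudFormation's JSON shape.
# Required properties (subnets, container definitions, listeners, ...) are omitted.
TEMPLATE = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Resources": {
        "ChatVpc": {"Type": "AWS::EC2::VPC", "Properties": {"CidrBlock": "10.0.0.0/16"}},
        "ChatCluster": {"Type": "AWS::ECS::Cluster"},
        "ChatTaskDef": {
            "Type": "AWS::ECS::TaskDefinition",
            "Properties": {"RequiresCompatibilities": ["FARGATE"], "NetworkMode": "awsvpc"},
        },
        "ChatService": {
            "Type": "AWS::ECS::Service",
            "Properties": {"LaunchType": "FARGATE", "Cluster": {"Ref": "ChatCluster"}},
        },
        "ChatLoadBalancer": {
            "Type": "AWS::ElasticLoadBalancingV2::LoadBalancer",
            "Properties": {"Type": "network"},  # a Network Load Balancer
        },
    },
}


def resource_types(template):
    """Count the resources in a CloudFormation-style template by their Type."""
    return Counter(res["Type"] for res in template.get("Resources", {}).values())


for rtype, count in sorted(resource_types(TEMPLATE).items()):
    print(f"{count} x {rtype}")
```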

Testing and Validation

Before distributing updates to customers, vendors can create test appliances in their own AWS accounts. This supports safe validation of:

New model versions

Infrastructure modifications

Performance optimizations

For example, instead of risking a change to a live customer environment, the vendor can deploy and verify the complete stack in an isolated test account.
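The post does not describe how Tensor9 creates test appliances internally. As a generic illustration of deploying a full stack into an isolated test account, the sketch below uses the standard boto3 CloudFormation client calls (`create_stack`, `get_waiter`, `describe_stacks`, `delete_stack`). The client is passed in so it can be built with test-account credentials; the profile name, stack name, and file name in the usage comments are hypothetical.

```python
def deploy_test_stack(cfn, stack_name, template_body):
    """Create a CloudFormation stack and wait for it to finish.

    `cfn` is expected to be a boto3 CloudFormation client whose credentials
    point at the vendor's isolated test account. The waiter raises
    botocore.exceptions.WaiterError if creation fails or times out.
    """
    cfn.create_stack(
        StackName=stack_name,
        TemplateBody=template_body,
        # Acknowledges IAM resources in the template
        # (use CAPABILITY_NAMED_IAM if they have custom names).
        Capabilities=["CAPABILITY_IAM"],
    )
    cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
    stacks = cfn.describe_stacks(StackName=stack_name)["Stacks"]
    return stacks[0]["StackStatus"]


def tear_down_test_stack(cfn, stack_name):
    """Delete the test stack once validation is done, keeping the test account clean."""
    cfn.delete_stack(StackName=stack_name)
    cfn.get_waiter("stack_delete_complete").wait(StackName=stack_name)


# Example usage (hypothetical names; requires boto3 and AWS credentials):
# import boto3
# cfn = boto3.Session(profile_name="test-account").client("cloudformation")
# with open("chat-app.template.json") as f:
#     status = deploy_test_stack(cfn, "chat-app-validation", f.read())
# ... run smoke tests against the new model version or infrastructure change ...
# tear_down_test_stack(cfn, "chat-app-validation")
```

Passing the client in, rather than creating it inside the functions, keeps the target account explicit and makes the functions easy to exercise with a stub.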

Customer Deployment Experience

From the customer’s perspective, deployment is fully automated:

The customer connects their AWS account.

Tensor9 provisions the infrastructure stack directly into that environment.

Within about 15 minutes, a production-ready AI system is available.

The system is self-contained: all inference runs locally within the customer’s AWS account, with no external API calls and no data transferred outside it.

Operational Model

Operations are performed through the standard AWS console and tooling. Via the digital twin, vendors can view ECS tasks, CloudWatch metrics, and application logs as if they were operating their own infrastructure, while sensitive customer data stays private in the customer’s account.

In the demo, CloudWatch metrics viewed through the digital twin reveal high CPU utilization during peak model usage.
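The post does not say how the twin exposes metrics programmatically. Assuming direct access to CloudWatch via boto3 in an account where the ECS service’s metrics are visible, the sketch below builds the arguments for `get_metric_statistics` on the `AWS/ECS` `CPUUtilization` metric (dimensions `ClusterName` and `ServiceName`) and flags datapoints above a threshold. The cluster name, service name, and the 80% threshold are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def ecs_cpu_query(cluster, service, hours=3, period=300):
    """Build keyword arguments for CloudWatch GetMetricStatistics on an ECS service's CPU."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/ECS",
        "MetricName": "CPUUtilization",
        "Dimensions": [
            {"Name": "ClusterName", "Value": cluster},
            {"Name": "ServiceName", "Value": service},
        ],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": period,  # seconds per datapoint
        "Statistics": ["Average", "Maximum"],
    }


def high_cpu_periods(datapoints, threshold=80.0):
    """Return (timestamp, average) pairs whose Average exceeds `threshold` percent, oldest first.

    CloudWatch does not return datapoints in a guaranteed order, so the result is sorted.
    """
    hot = [(dp["Timestamp"], dp["Average"]) for dp in datapoints if dp["Average"] > threshold]
    return sorted(hot)


# Example usage (hypothetical names; requires boto3 and AWS credentials):
# import boto3
# cloudwatch = boto3.client("cloudwatch")
# resp = cloudwatch.get_metric_statistics(**ecs_cpu_query("chat-cluster", "open-webui"))
# for ts, avg in high_cpu_periods(resp["Datapoints"]):
#     print(ts.isoformat(), f"{avg:.1f}%")
```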

Continuous Updates

Infrastructure changes follow a release workflow:

Modify the IaC template (e.g., add Redis via ElastiCache).

Validate the change in a test appliance.

Execute a Tensor9 release, which propagates the change to all customer environments (as configured and allowed by customers).

In the example shown, adding a Redis caching layer reduced CPU utilization by 60% and improved response latency — with zero downtime and no manual customer coordination.
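The first step of that workflow is an ordinary IaC change. The post does not show the demo’s actual edit, so the sketch below assumes the template is handled as CloudFormation JSON in Python and adds a single-node Redis cluster (`AWS::ElastiCache::SubnetGroup` plus `AWS::ElastiCache::CacheCluster`) and an endpoint output. The logical IDs, node type, and subnet and security-group references are hypothetical and assume those resources already exist in the template. Pointing the application at the cache is a separate change not covered here.

```python
import copy
import json


def add_redis_cache(template, subnet_refs, security_group_ref, node_type="cache.t3.micro"):
    """Return a copy of `template` with a single-node Redis cluster added.

    The input template is left unmodified, so the original and updated
    versions can be diffed before the change is validated and released.
    """
    updated = copy.deepcopy(template)
    resources = updated.setdefault("Resources", {})
    resources["RedisSubnetGroup"] = {
        "Type": "AWS::ElastiCache::SubnetGroup",
        "Properties": {
            "Description": "Subnets for the chat app Redis cache",
            "SubnetIds": list(subnet_refs),
        },
    }
    resources["RedisCache"] = {
        "Type": "AWS::ElastiCache::CacheCluster",
        "Properties": {
            "Engine": "redis",
            "CacheNodeType": node_type,
            "NumCacheNodes": 1,
            "CacheSubnetGroupName": {"Ref": "RedisSubnetGroup"},
            "VpcSecurityGroupIds": [security_group_ref],
        },
    }
    # Expose the endpoint so the application (or a later stack) can find the cache.
    updated.setdefault("Outputs", {})["RedisEndpoint"] = {
        "Value": {"Fn::GetAtt": ["RedisCache", "RedisEndpoint.Address"]}
    }
    return updated


base = {"Resources": {"ChatCluster": {"Type": "AWS::ECS::Cluster"}}}
new = add_redis_cache(
    base,
    subnet_refs=[{"Ref": "PrivateSubnetA"}, {"Ref": "PrivateSubnetB"}],  # hypothetical
    security_group_ref={"Ref": "CacheSecurityGroup"},                    # hypothetical
)
print(json.dumps(new["Resources"]["RedisCache"], indent=2))
```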

Summary

Tensor9 enables software vendors to:

Package applications as deployable appliances from existing IaC.

Operate customer environments via a digital twin model that preserves data isolation.

Test and validate updates in controlled environments before release.

Deliver continuous improvements across distributed customer deployments without manual intervention.

This approach allows enterprises to run private AI systems entirely within their own clouds, while vendors maintain the ability to manage and support those deployments at scale, without rebuilding their existing products.

Watch the full demo for a detailed walkthrough of the system.

If you’re interested in exploring the end customer experience of deploying an app privately, you can check out our new Customer Playground and deploy an app into your own account.

We’ll soon be launching a vendor playground to enable self-service trials of the Tensor9 vendor experience. In the meantime, reach out to us and we can help you get started.